Day 1: ReactJS Application for generating script lines using AWS Polly.

Challenge: Dive into a specific cloud service you're less familiar with & explore its advanced features.

Technologies used:

- AWS Polly (The unfamiliar Technology)

- AWS Lambda URL function

- NextJS / ReactJS

For this challenge I decided to learn AWS Polly. I called the Polly speech synthesis API from an AWS Lambda function exposed through a function URL, and wrote a NextJS application for the UI. The UI lets you create lines, makes API calls to synthesize speech for each one, and plays the lines in sequence to produce an audio performance of the script.

Video Demo

Challenges Encountered

UI Issues and State Management for Sequential Playback

Playing audio in sequence is tricky in a UI, because user interactions can change the playback flow at any point. I managed the audio events with a linked-list approach, where the next audio event fires only after the current one finishes playing. This keeps the playback order clear while leaving room to handle user interactions and state changes.
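Here is a minimal sketch of that linked-list idea. It is written in Python to match the backend code in this post, not taken from the app's React code, and the names `LineNode`, `build_script`, `play_script`, and the `play_audio(text, on_ended)` callback are all hypothetical. In a browser, `on_ended` would typically be wired to the audio element's `ended` event.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass
class LineNode:
    """One script line, linked to the line that should play after it."""
    text: str
    next: Optional["LineNode"] = None


def build_script(lines: Iterable[str]) -> Optional[LineNode]:
    """Chain the lines into a singly linked list and return its head."""
    head: Optional[LineNode] = None
    tail: Optional[LineNode] = None
    for text in lines:
        node = LineNode(text)
        if tail is None:
            head = node
        else:
            tail.next = node
        tail = node
    return head


# Hypothetical player signature: play the clip for `text`, then call
# `on_ended` once the clip has finished.
PlayAudio = Callable[[str, Callable[[], None]], None]


def play_script(head: Optional[LineNode], play_audio: PlayAudio) -> None:
    """Play each node in order; the next node starts only from on_ended."""
    def play_node(node: Optional[LineNode]) -> None:
        if node is None:
            return  # end of the script
        play_audio(node.text, lambda: play_node(node.next))

    play_node(head)
```

Because the next node is only started from inside the completion callback, a clip that never reports completion (for example, one the user paused) naturally halts the rest of the sequence.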

CORS Issues with AWS Lambda for Audio Synthesis

CORS (Cross-Origin Resource Sharing) errors are a common problem when a frontend application calls a backend service on another origin, and my Lambda function for audio synthesis was no exception. I worked through it using the documentation and what I already knew about HTTP headers. Adjusting the CORS settings on the Lambda function and making sure the appropriate headers were included resolved the errors and let the frontend talk to the function.
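One way this can look is sketched below, assuming a Python Lambda handler behind a function URL. The post does not say which runtime or which exact headers were used, so `ALLOWED_ORIGIN`, the header values, and the echoed `text` field are illustrative assumptions. Function URLs also have their own CORS settings, which may be what was adjusted in the original project.

```python
import json

# Hypothetical frontend origin; replace with the real site origin.
ALLOWED_ORIGIN = "https://example.com"

CORS_HEADERS = {
    "Access-Control-Allow-Origin": ALLOWED_ORIGIN,
    "Access-Control-Allow-Methods": "POST, OPTIONS",
    "Access-Control-Allow-Headers": "Content-Type",
}


def lambda_handler(event, context):
    # Function URL events carry the HTTP method under requestContext.http.
    method = event.get("requestContext", {}).get("http", {}).get("method", "")

    # Answer the browser's preflight request without doing any real work.
    if method == "OPTIONS":
        return {"statusCode": 204, "headers": CORS_HEADERS, "body": ""}

    body = json.loads(event.get("body") or "{}")
    return {
        "statusCode": 200,
        "headers": {**CORS_HEADERS, "Content-Type": "application/json"},
        # Placeholder payload; the real function would return synthesized audio.
        "body": json.dumps({"received": body.get("text", "")}),
    }
```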

Sending Binary Audio Data as a JSON String

JSON has no binary data type, so returning audio from a Lambda function in a JSON response takes an extra step. The common solution is to base64-encode the audio, which represents the binary data as ASCII text that fits in a JSON string.

I used a base64 encode function in the Lambda function to convert the binary audio data to a base64 string and included it directly in the JSON response. JavaScript can load audio from a base64 string, so this worked well with the frontend.

This was a practical way to work within the constraints of the Lambda environment, and a reminder that it pays to understand the capabilities and limits of each data format when moving data between services.
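The encoding step can be sketched as follows, assuming a Python Lambda function. `build_audio_response`, `to_data_uri`, and the `audio` / `format` field names are hypothetical. In a real handler the bytes would come from Polly, for example by reading the `AudioStream` of a `synthesize_speech` response, which is left out here so the sketch runs without AWS credentials.

```python
import base64
import json


def build_audio_response(audio_bytes: bytes) -> dict:
    """Wrap raw audio bytes in a JSON body by base64-encoding them."""
    encoded = base64.b64encode(audio_bytes).decode("ascii")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"audio": encoded, "format": "mp3"}),
    }


def to_data_uri(encoded: str, mime: str = "audio/mpeg") -> str:
    """Build the kind of data URI a browser audio element can load."""
    return f"data:{mime};base64,{encoded}"
```

On the frontend, the `audio` string can be turned into a data URI of this shape and handed to an audio element. The tradeoff is size: base64 output is roughly a third larger than the raw bytes.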

Known Issues / Future Development

As I continue to refine my application, it's important to acknowledge and address some of the current known issues. Two significant challenges stand out: the absence of user authentication and security concerns surrounding the Lambda function URL endpoint. While my project holds promise as a potential portfolio piece, these major issues must be resolved before it can be considered a shippable demo.

User authentication is a critical aspect of any application, ensuring that only authorized users can access its features and data. Without proper authentication mechanisms in place, my application lacks the necessary safeguards to protect user information and maintain data integrity. Implementing robust user authentication will not only enhance the security of my application but also provide users with a seamless and personalized experience.

In addition to user authentication, I must also address security concerns surrounding the Lambda function URL endpoint. This endpoint serves as a crucial component of my application, facilitating communication between the frontend and backend systems. However, without adequate security measures, it poses a potential vulnerability that could be exploited by malicious actors. Strengthening the security of the Lambda function URL endpoint is paramount to safeguarding my application against external threats and ensuring the confidentiality and integrity of my data.

While these issues present significant challenges, they also represent opportunities for growth and improvement. By prioritizing the implementation of user authentication and enhancing the security of the Lambda function URL endpoint, I can take significant strides towards transforming my application into a shippable demo. Addressing these key concerns will not only bolster the overall quality and reliability of my project but also demonstrate my commitment to delivering secure and user-friendly software solutions.

As I work towards resolving these known issues, I remain dedicated to the ongoing development and refinement of my application. With careful planning, diligent effort, and a focus on best practices in software development, I am confident that I can overcome these challenges and deliver a polished and impactful demo that showcases the full potential of my project.

Day 2: ReactJS Application w/ Native Python Backend

Challenge: Architect and deploy a multi-tier application using cloud-native services (e.g., web server, database, caching layer).

For today's challenge I took a simple approach and made a simple deployment: two servers, with a NextJS application as the front end and a native Python API as the backend.

Technologies used:

- EC2

- Route 53

- Elastic IP

- Custom Security Groups

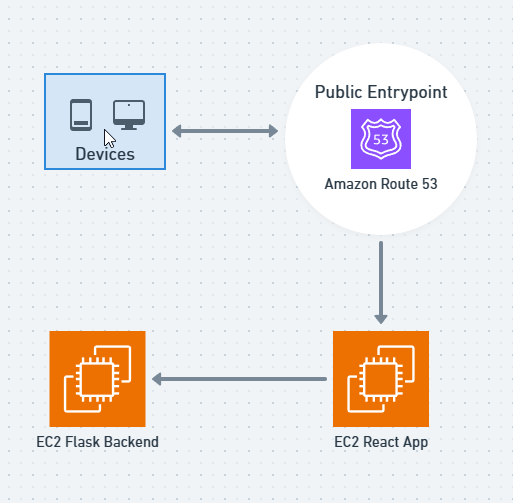

The deployment of this was relatively simple. Here's the architecture diagram.

As you can see, it was pretty simple. Here's the component breakdown:

- NextJS React PM2 Deployment on Ubuntu Image

This deployment is a simple NextJS React app with a single page that has a welcome message and a single button. The button sends an API request to the backend to verify that the deployment is working correctly and the servers can talk to each other.

Here's the site's Front End

- Native Python Backend on Ubuntu Image

Here's literally the entire backend in this one code-block.

I wasn't kidding when I said this was a simple native back-end. 😅

    from http.server import BaseHTTPRequestHandler, HTTPServer
    import json

    # Binding to 'localhost' accepts connections from the same machine only;
    # a different bind address is needed for requests from other hosts.
    hostname = 'localhost'
    port = 8000

    MSG: str = 'Hello, world!'
    # json.dumps returns a str; .encode() turns it into the bytes sent on the wire.
    MSG_ENCODED: bytes = json.dumps({'message': MSG}).encode('utf-8')


    class WebServer(BaseHTTPRequestHandler):
        def do_GET(self):
            # Every GET request gets the same JSON message back.
            self.send_response(200)
            self.send_header('Access-Control-Allow-Origin', '*')
            self.send_header('Content-type', 'application/json')
            self.end_headers()
            self.wfile.write(MSG_ENCODED)


    if __name__ == '__main__':
        webServer = HTTPServer((hostname, port), WebServer)
        print(f'Server started http://{hostname}:{port}')
        try:
            webServer.serve_forever()
        except KeyboardInterrupt:
            pass  # Ctrl+C stops the server cleanly
        webServer.server_close()
        print(f'Server stopped http://{hostname}:{port}')

The Application

The application is just a simple test of the deployment. The front end has a button that, when pressed, makes an API call to the backend. The backend returns a message, which is then displayed in the application. Demo below:

Goes from this:

To this:

To this:

Deployment Difficulties

Navigating the intricacies of deployment can be a daunting task, especially when encountering unexpected hurdles. Recently, while working on the front end of my project, I found myself facing challenges in getting the Node.js deployment to run smoothly as a systemd service on Ubuntu. Despite investing considerable effort into troubleshooting and tinkering with configurations, I hit roadblocks that seemed insurmountable.

After exhausting various avenues, I decided to pivot and explore alternative solutions. It was then that I stumbled upon PM2, a process manager for Node.js applications. With its reputation for reliability and ease of use, PM2 appeared to be the go-to choice for many developers. Embracing this industry standard, I opted to leverage PM2 as the daemon to manage my Node.js deployment.

The transition to PM2 proved to be a wise move. Its intuitive command-line interface and robust feature set addressed the issues I had encountered with systemd, and in no time my Node.js application was up and running smoothly.

Interestingly, while the front-end posed its set of challenges, the backend, powered by Python, experienced no such deployment hurdles. The systemd service approach worked like a charm with the Python backend, requiring no modifications or adjustments. This stark contrast underscored the nuances and idiosyncrasies inherent in deploying different technologies, highlighting the importance of adaptability and a willingness to explore alternative solutions.

Security considerations

Securing the backend infrastructure emerged as a paramount concern in my recent development efforts, prompting a meticulous evaluation of potential vulnerabilities and corresponding mitigation strategies. Given the urgency of bolstering the backend's defenses, I opted for a pragmatic yet effective approach to fortify its security posture.

Central to this endeavor was the implementation of stringent access controls to restrict unauthorized access to the backend services. I made the strategic decision to permit connections exclusively from the frontend server, establishing a trusted communication channel between the frontend and backend components. This involved configuring firewall rules and access policies to enforce a strict "deny-by-default" stance, so that only requests originating from the frontend server were granted access to the backend.

I also restricted SSH access to the backend so that it is accepted only from the frontend server. This granular approach minimized the attack surface and made the infrastructure more resilient against potential exploits.

The result is a multi-tiered login pipeline. Users must first remote into the frontend server before establishing an SSH connection to the backend server, which adds a layer of security and hides the backend's open ports from external reconnaissance efforts such as port scans. This makes it much harder for potential adversaries to detect and exploit vulnerabilities in the backend infrastructure.
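The deny-by-default behaviour described above can be modelled in a few lines of Python. This is only an illustration of the rule logic, not the actual security group configuration: the `IngressRule` class, the `is_allowed` helper, and the IP address `10.0.1.10` are all hypothetical, and port 8000 is taken from the backend code in this post.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Iterable


@dataclass(frozen=True)
class IngressRule:
    """Allow inbound traffic to `port` from addresses inside `source_cidr`."""
    port: int
    source_cidr: str


# Hypothetical address of the frontend server.
FRONTEND_IP = "10.0.1.10"

# Backend rules: API and SSH traffic are accepted from the frontend only.
BACKEND_RULES = [
    IngressRule(port=8000, source_cidr=f"{FRONTEND_IP}/32"),
    IngressRule(port=22, source_cidr=f"{FRONTEND_IP}/32"),
]


def is_allowed(rules: Iterable[IngressRule], source_ip: str, port: int) -> bool:
    """Deny by default: traffic passes only if some rule explicitly matches."""
    addr = ip_address(source_ip)
    return any(
        rule.port == port and addr in ip_network(rule.source_cidr)
        for rule in rules
    )
```

With these rules, `is_allowed(BACKEND_RULES, "203.0.113.7", 22)` is false for an outside address, while the same call with `FRONTEND_IP` is true. That is the jump-host pattern from the paragraph above: SSH to the backend only works after first logging in to the frontend server.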

While these measures provided immediate relief in bolstering the backend's security posture, I acknowledge the need for continuous refinement and exploration of more robust security mechanisms. Looking ahead, I am keen on exploring the implementation of a Virtual Private Cloud (VPC) to further isolate and protect the backend infrastructure, ensuring a comprehensive and resilient security framework. Despite the time constraints encountered, I remain committed to prioritizing security and implementing robust measures to safeguard the integrity and confidentiality of the application's data and operations.

Day 3: Exploration of fault tolerance

Challenge: Implement high availability and fault tolerance measures for your cloud infrastructure.

Some of this may have been expanded by LLMs, don't roast me too bad 😅. Thanks for reading, come back for the rest of the days.

- Whittington